Essential Things You Need to Know About the Google Play Experiments Tool

The Google Play Experiments Tool is among the most effective ways to increase your app’s conversion rate. However, some marketers are still new to this tool, and others don’t know about the recent updates that affect how their experiments perform.

In this post, let’s take a look at how Google Play Experiments work, the recent updates you need to know about, and other important details that help your app listing perform optimally.

Continue reading to learn more about Google Play Store Listing Experiments.

Contents

- What are app listing experiments

- What are the benefits of app listing experiments

- What tests can you do with listing experiments

- How to run experiments on Google Play

- Best practices in conducting listing experiments

- Which changes were made to Google Play Store listing experiments

- What to remember when conducting Google Play Experiments

What are app listing experiments

The Google Play Store lets you run experiments to find the most effective design for your app page. Experiments use A/B testing: you compare different versions of your store listing page and identify which version leads to the best results.
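To make the idea concrete, here is a minimal sketch of the comparison behind an A/B test. The visitor and install counts are made up for illustration, and the function names are hypothetical; the Play Console does this bookkeeping for you.

```python
def conversion_rate(installs: int, visitors: int) -> float:
    """Share of store listing visitors who installed the app."""
    if visitors <= 0:
        raise ValueError("visitors must be positive")
    return installs / visitors


def best_version(results: dict[str, tuple[int, int]]) -> str:
    """Return the listing version with the highest conversion rate.

    `results` maps a version name to (installs, visitors).
    """
    return max(results, key=lambda name: conversion_rate(*results[name]))


# Hypothetical counts, not real experiment data.
results = {
    "current": (300, 10_000),
    "variant_a": (345, 10_000),
    "variant_b": (310, 10_000),
}

for name, (installs, visitors) in results.items():
    print(f"{name}: {conversion_rate(installs, visitors):.2%}")
print("Highest conversion rate:", best_version(results))
```

A higher raw conversion rate alone does not make a version the winner; the difference also has to be large enough to rule out chance.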

What are the benefits of app listing experiments

Running experiments in the Google Play Store provides many invaluable benefits, which include the following:

- Identify the best graphics and texts that you should include in your app listing.

- Increase app installs and user retention.

- Learn more about various groups of your users based on their language and locality.

- Gain more insights into your users’ behavior.

According to ShyftUp, you get more out of app listing experiments when you combine them with other App Store Optimization (ASO) campaigns and strategies.

What tests can you do with listing experiments

As mentioned above, Google experiments are primarily conducted with A/B testing.

There are two types of experiments that you can run on Google Play: global and localized.

With a global experiment, you can A/B test graphics in your app’s default language: the promo video, icon, feature graphic, and screenshots. All global users are included in the experiment.

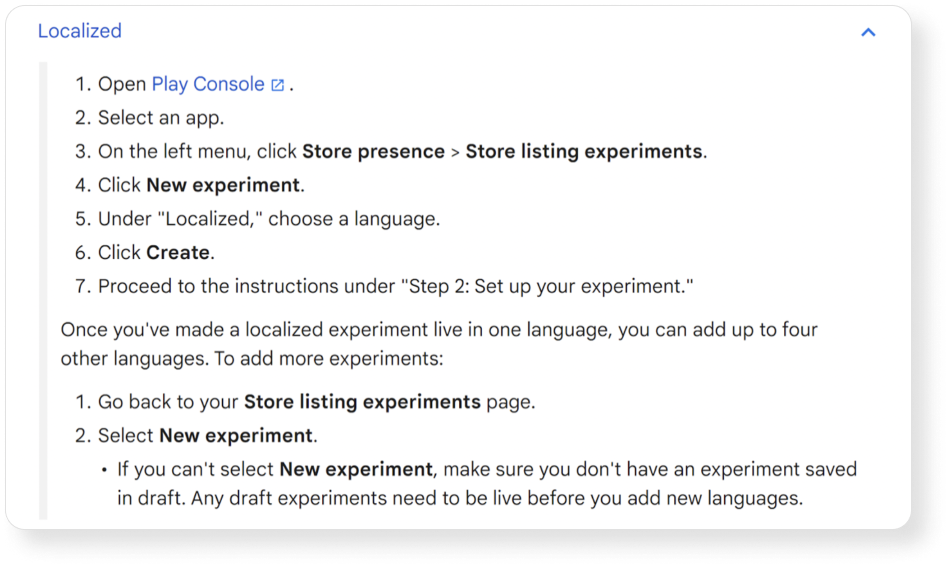

Localized experiments, on the other hand, can test not only graphics but also text. You can test in up to five languages.

How to run experiments on Google Play

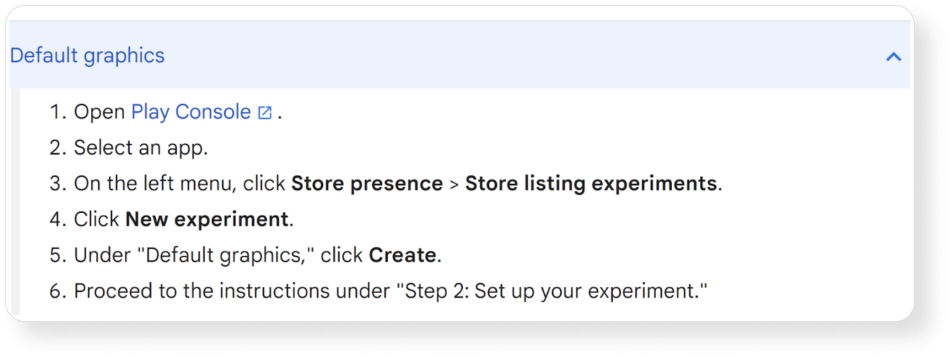

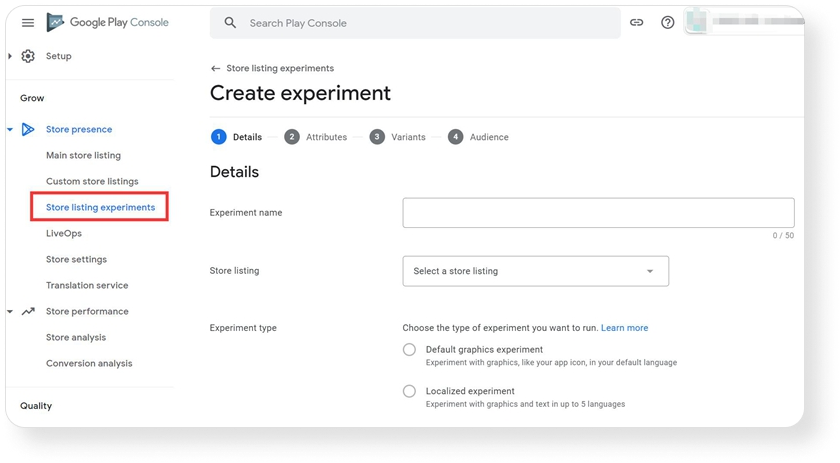

You conduct your experiments in the Google Play Console.

Go to Store listing experiments and set up your test from there. Follow the on-screen instructions to get your test up and running.

Once you’re done with your test, you can review and apply results based on your findings.

Best practices in conducting listing experiments

Google Play experiments are a powerful tool for improving your app’s performance. To take full advantage of them, remember the following:

- The best way to take advantage of store listing experiments is by testing app icons, screenshots, and videos.

- If you want to obtain the most accurate results, consider testing one app page element at a time.

- Google advises that you run your experiment for at least seven days. This will also help you measure data coming from both weekends and weekdays.

- Regularly perform experiments and revisit your assets to ensure your app page is still designed optimally for changes that may occur over time.

- Be sure to turn on email notifications for your experiments. This ensures that you are informed as soon as there are updates on your test.

Which changes were made to Google Play Store listing experiments

In 2021, Apple announced the new Product Page Optimization (PPO) feature for iOS 15, and it became available to developers in December 2021.

The PPO feature is somewhat similar to the store listing experiments, which Google already made available to its app developers many years ago.

In response to Apple’s PPO, Google made some improvements and changes to its store listing experiments in 2022. Creating an experiment now takes three steps:

- Store listing

- Experiment goals

- Variant configuration

Let’s go through each of them in more detail.

Store listing

In the Store listing step, you configure which app you want to include in your experiment.

You then choose the name of your test and the experiment type, which is either “global” or “localized”.

Experiment goals

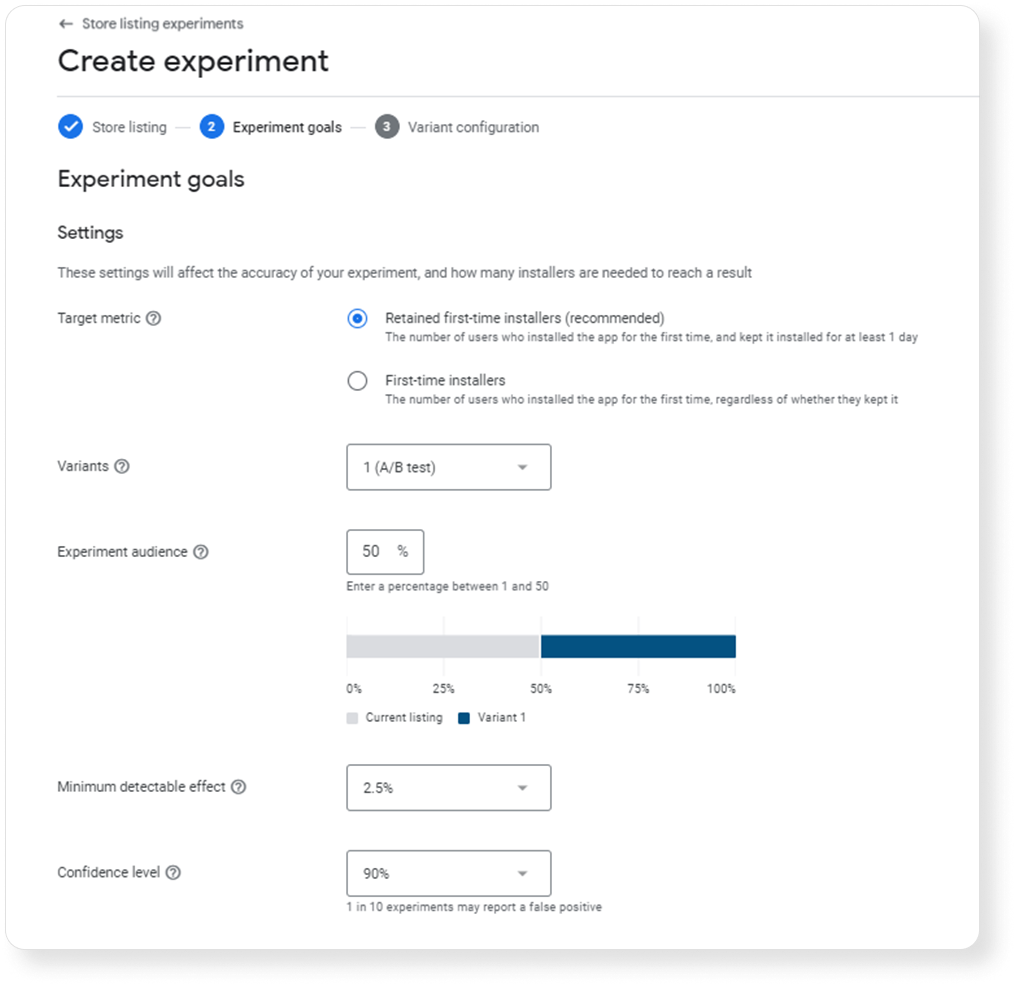

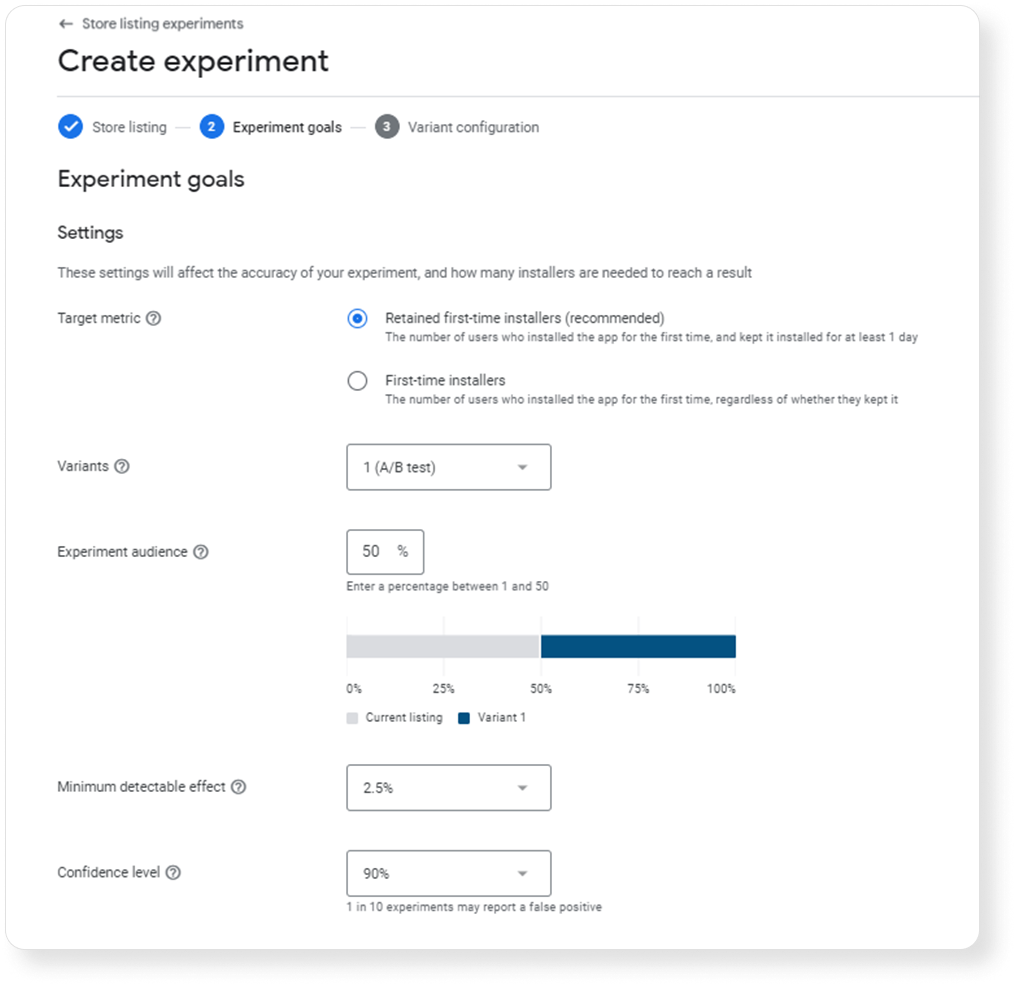

Of the three steps, the second has seen the biggest changes. Google gave this step more attention, and rightly so.

Here are some of the most important updates you’ll find under the Experiment goals step:

Target metric

In the previous version of the experiments, you could only specify the percentage of users to include in the test. Now, you can add more specific details.

Under Target metric, you can choose between two options:

- Retained first-time installers: this is the default and recommended setting. It measures the number of users who installed your app and kept it for at least a day.

- First-time installers: choose this setting if you want to count the users who installed your app regardless of whether they kept it for a day or not.
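The difference between the two metrics is easiest to see on a handful of install records. The sketch below assumes each record is a hypothetical (installed at, uninstalled at) pair, with `None` meaning the app is still installed; this record format is an illustration and not something Google exposes in this shape.

```python
from datetime import datetime, timedelta
from typing import Optional

# Hypothetical record: (installed_at, uninstalled_at or None if still installed).
InstallRecord = tuple[datetime, Optional[datetime]]


def count_target_metrics(records: list[InstallRecord]) -> tuple[int, int]:
    """Return (first_time_installers, retained_first_time_installers)."""
    first_time = len(records)
    retained = sum(
        1
        for installed_at, uninstalled_at in records
        # Retained means the app was kept for at least one day.
        if uninstalled_at is None
        or uninstalled_at - installed_at >= timedelta(days=1)
    )
    return first_time, retained


day0 = datetime(2022, 6, 1, 12, 0)
records = [
    (day0, None),                        # still installed
    (day0, day0 + timedelta(hours=3)),   # removed the same day
    (day0, day0 + timedelta(days=2)),    # removed after two days
]

first_time, retained = count_target_metrics(records)
print(f"First-time installers: {first_time}, retained: {retained}")
```

The user who removed the app after three hours counts toward first-time installers but not toward retained first-time installers, which is why the retained metric is the stricter signal.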

Variants

Setting up variants is simpler. You can add up to three variants per experiment. While testing several variants at once is tempting, experts recommend testing only one variant at a time to get a more conclusive result, since each additional variant splits your audience further.

Experiment audience

You can choose a percentage between 1 and 50. This is the share of your store listing visitors who are included in the test. Including 50% of your audience is highly recommended, because a larger sample size gives more accurate data.
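As a rough illustration of what the percentage means for traffic, the sketch below assumes that the experiment audience is divided equally among the variants and that everyone else sees the current listing. That equal split is an assumption of this example, and `traffic_shares` is a hypothetical helper, not a Play Console function.

```python
def traffic_shares(audience_percent: float, num_variants: int) -> dict[str, float]:
    """Percentage of visitors that sees each listing version.

    Assumes the experiment audience is split equally across variants
    and the remainder sees the current listing.
    """
    if not 1 <= audience_percent <= 50:
        raise ValueError("audience_percent must be between 1 and 50")
    if num_variants < 1:
        raise ValueError("num_variants must be at least 1")

    per_variant = audience_percent / num_variants
    shares = {"current": 100 - audience_percent}
    for i in range(1, num_variants + 1):
        shares[f"variant_{i}"] = per_variant
    return shares


print(traffic_shares(50, 1))
print(traffic_shares(50, 2))
```

Under this assumption, every extra variant shrinks the share of visitors each variant receives, so the test needs more time to collect the same amount of data per variant.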

Minimum detectable effect

With this setting, you specify the minimum difference between the control and a variant that the test must be able to detect in order to determine a winner.

You can choose a value from 0.5% to 3.0%. The lower the percentage, the more users you need to complete your test.
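To see why a lower minimum detectable effect needs more users, here is a sketch based on the textbook sample size formula for comparing two proportions. It is not Google’s own calculation. It assumes the effect is a relative lift over a baseline conversion rate, uses a statistical power of 80%, and the 30% baseline is a made-up figure.

```python
from math import ceil
from statistics import NormalDist


def required_sample_size(
    baseline_rate: float,
    relative_mde: float,
    confidence: float = 0.90,
    power: float = 0.80,
) -> int:
    """Approximate users needed per version (two-sided, two-proportion test)."""
    if not 0 < baseline_rate < 1:
        raise ValueError("baseline_rate must be between 0 and 1")
    if relative_mde <= 0:
        raise ValueError("relative_mde must be positive")

    # Assumption: the MDE is a relative lift over the baseline rate.
    variant_rate = baseline_rate * (1 + relative_mde)
    if variant_rate >= 1:
        raise ValueError("baseline_rate * (1 + relative_mde) must be below 1")

    alpha = 1 - confidence
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)
    z_power = NormalDist().inv_cdf(power)
    variance = baseline_rate * (1 - baseline_rate) + variant_rate * (1 - variant_rate)
    effect = variant_rate - baseline_rate
    return ceil((z_alpha + z_power) ** 2 * variance / effect ** 2)


# Hypothetical 30% baseline conversion rate.
for mde in (0.005, 0.01, 0.03):
    print(f"MDE {mde:.1%}: about {required_sample_size(0.30, mde):,} users per version")
```

Because the effect size is squared in the denominator, halving the minimum detectable effect roughly quadruples the number of users required.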

Confidence level

As its name implies, the confidence level tells Google how confident you want to be in your test results. A higher confidence level makes the results more reliable, but it also requires more data.

You can choose between 90% and 99%. A higher confidence level is preferable, but if you choose 99%, your test will run longer than usual.

In return, a high confidence level lowers the chance of getting a false positive.
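The trade-off can be illustrated with a standard two-proportion z-test. This is a generic statistical sketch under made-up numbers, not a description of how Google evaluates experiments. With a confidence level of 90%, a difference is accepted when the chance of seeing it with no real effect (the p-value) is below 10%; at 99%, that threshold drops to 1%.

```python
from math import sqrt
from statistics import NormalDist


def is_significant(
    control_installs: int,
    control_visitors: int,
    variant_installs: int,
    variant_visitors: int,
    confidence: float = 0.90,
) -> bool:
    """Two-sided two-proportion z-test at the given confidence level."""
    p_control = control_installs / control_visitors
    p_variant = variant_installs / variant_visitors
    pooled = (control_installs + variant_installs) / (control_visitors + variant_visitors)
    std_error = sqrt(pooled * (1 - pooled) * (1 / control_visitors + 1 / variant_visitors))
    if std_error == 0:
        return False  # no variation in the data, nothing to detect

    z = (p_variant - p_control) / std_error
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return p_value < 1 - confidence


# Hypothetical counts: 3.00% vs 3.45% conversion.
for level in (0.90, 0.95, 0.99):
    verdict = is_significant(300, 10_000, 345, 10_000, confidence=level)
    print(f"Confidence {level:.0%}: significant = {verdict}")
```

With these example numbers, the same difference passes at 90% but not at 99%. A stricter confidence level needs more data before it declares a winner, which is why the test takes longer.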

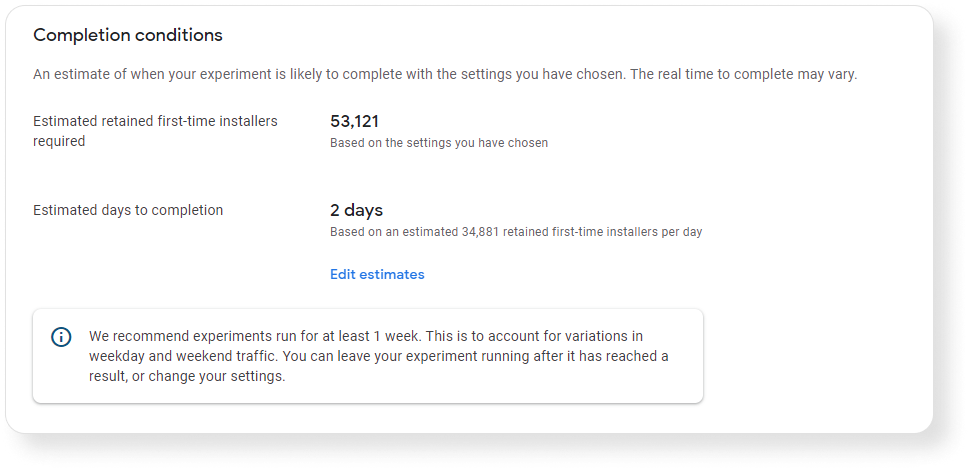

Variant configuration

In the third step, Variant configuration, you’ll be able to see how long your experiment is expected to run.

As seen in the screenshot above, you’ll see the “Estimated retained first-time installers” or “First-time installers” required, depending on which target metric you chose.

You’ll also find the “Estimated days to completion,” which is based on how many installers your app gets.
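A back-of-the-envelope version of that estimate might look like the sketch below. It assumes the experiment audience is split equally across variants and applies the seven-day minimum recommended earlier as a floor. All figures are hypothetical, and the estimate the Play Console displays may be calculated differently.

```python
from math import ceil

MIN_DAYS = 7  # run for at least a week to cover weekdays and weekends


def estimated_days_to_completion(
    required_per_variant: int,
    daily_installers: float,
    audience_percent: float,
    num_variants: int,
) -> int:
    """Rough number of days until every variant has enough installers."""
    if required_per_variant <= 0 or daily_installers <= 0 or num_variants < 1:
        raise ValueError("counts must be positive")
    if not 1 <= audience_percent <= 50:
        raise ValueError("audience_percent must be between 1 and 50")

    # Assumption: the audience is divided equally among the variants.
    daily_per_variant = daily_installers * (audience_percent / 100) / num_variants
    days = ceil(required_per_variant / daily_per_variant)
    return max(days, MIN_DAYS)


# Hypothetical app with 4,000 installers a day and 32,000 needed per variant.
print(estimated_days_to_completion(32_000, 4_000, 50, 1))
print(estimated_days_to_completion(32_000, 4_000, 50, 2))
```

In this example, adding a second variant doubles the estimated duration from 16 to 32 days, which is one practical reason to test one variant at a time.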

Those were the most significant changes made in the Google Play Experiments tool.

The new updates give you more control over how the test runs. Furthermore, the changes made by Google should lead to more statistically reliable results.

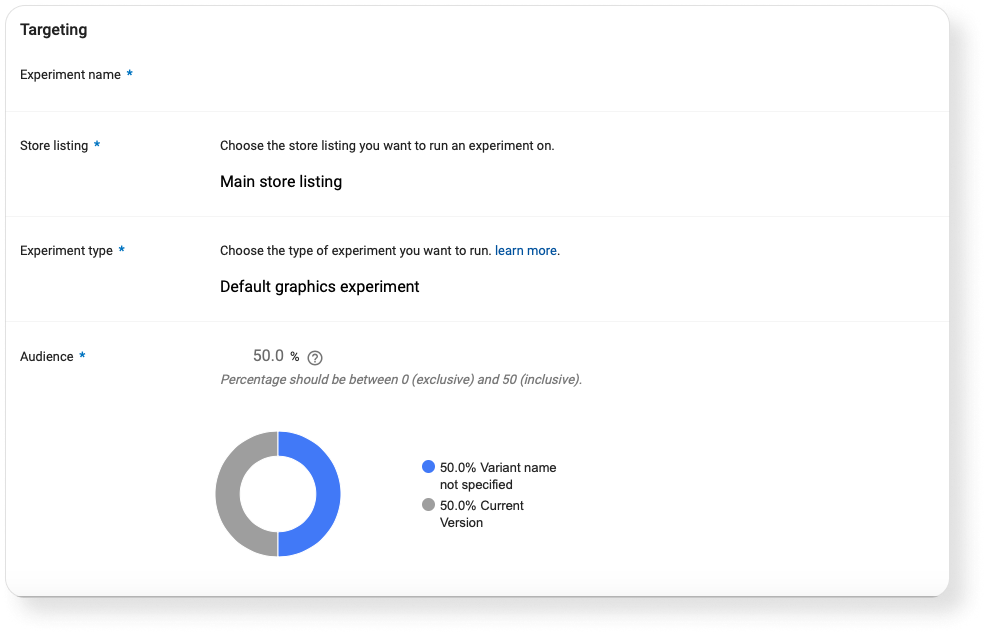

This is what setting up experiments looked like in the past:

Since changes were introduced, the setup looks like this:

What to remember when conducting Google Play Experiments

Google Play Store listing experiments are highly valuable in identifying the best variants of your app page. To ensure you run an effective Google Play experiment, keep the following in mind:

- Focus your experiments on screenshots, icons, and videos. The visual elements of your app product page play a major role in your conversion rate.

- Run your experiments for at least one week.

- Opt to test one asset at a time.

- Subscribe to email notifications to receive updates about your experiments.

- When you run your experiments, you’ll receive a lot of data. You can combine the data with user acquisition tools from ShyftUp.

Google’s experiments let you run A/B tests, but A/B testing alone is not enough. With ShyftUp, you can run app store optimization services with ease, leading to better app visibility, higher conversion rates, and, ultimately, more income.